My notes on different algorithms used to solve variety of problems in computer science.

Binary Search

Handbook on Binary Search.

Template on solving problems.

Eytzinger Binary Search:

-

Core idea - if the access pattern is root of the tree and then one of its two children, put those children physically close to the root, so they’ll all be on the same cache line.

-

BinaryHeaph - An implicit BT.

Graph Traversal Algorithms

BFS :

- MIT-BFS notes

- Multi-Source BFS

DFS :

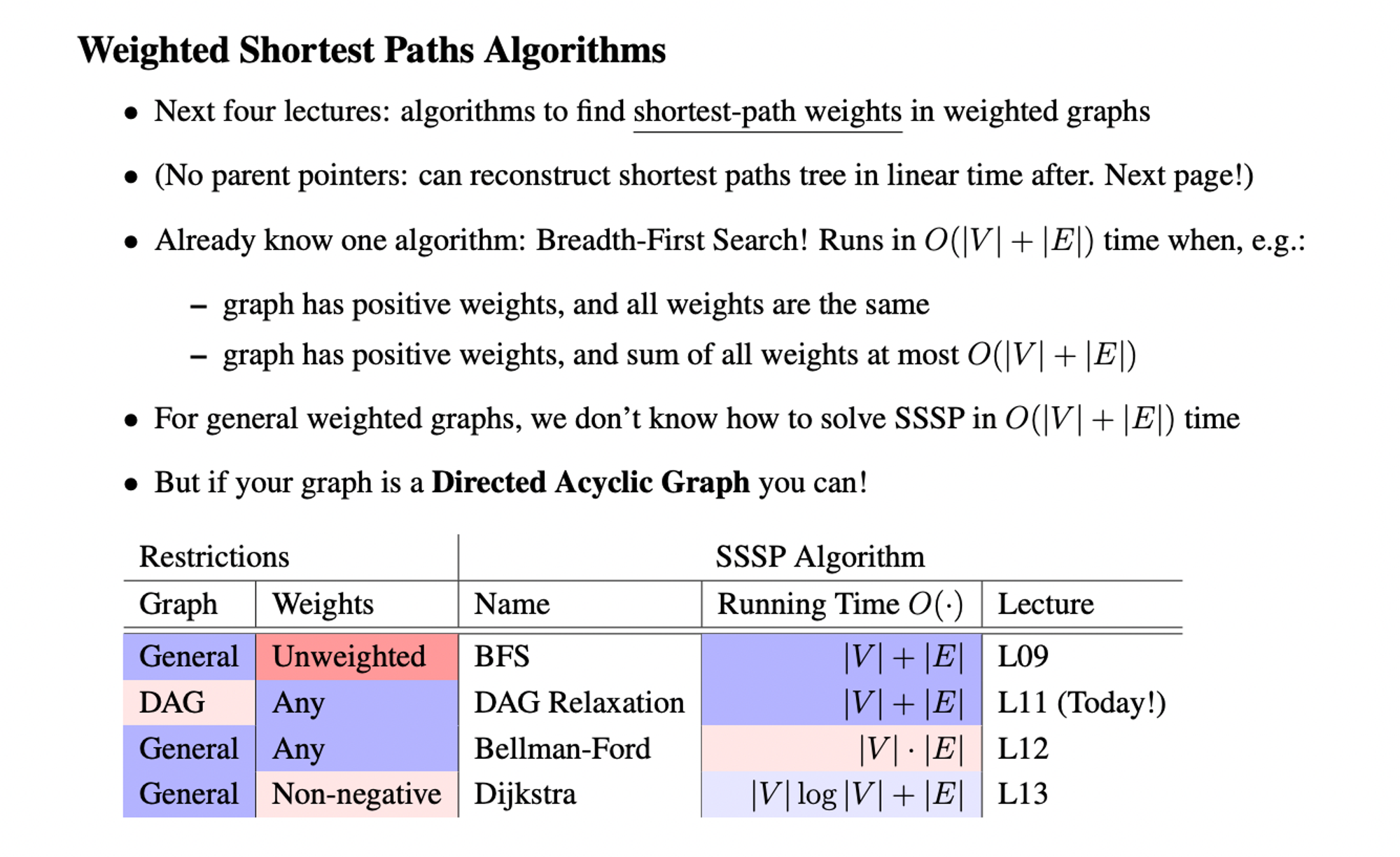

Weighted Shortest Path algos :

-

Different traversal algorithms.

-

Difference between Bellman-Ford vs Dijkstra vs Floyd-Warshall algorithm.

Dynamic Programming

Recursive/DP Algorithm Design Paradigm (SRTBOT) :

-

Subproblem definitions → The hardest part, to come up with similar subproblems.

-

subproblems can be defined/visualized in terms of

prefixes[:i],sufixes[i:],substrings/subarrays s[i:j]. -

ex, in LCS, prefix array would be to start from the last n…0,

text[row][col]and ask which one to pick next?,1 + text[row+1][col+1]ormax(text[row][col+1], text[row+1][col]). -

and suffix array would be to start from 1..n and check have we already calculated an LCS before?

1 + text[row-1][col-1]ormax(text[row-1][col], text[row][col-1]). -

Relate subproblems solutions recursively.

-

Topological order on subprobs to guarantee acyclic relationships,

Directed Acyclic Relationship. -

Base case of recursion.

-

Original problem: solve via subproblems.

-

Time analysis.

Tips :

-

Generally a bottom up(tabulation) approach is more optimal wherein you calculate the results of smaller subproblems first and then move up to the bigger ones. As opposed to recursive/memo solutions in which you start upfront and check for base conditions to exit.

-

Try to figure out which variables to consider for the size of dp table. How can we store intermediate results for those particular values.

-

Try visualising in terms of a directed acyclic graph(topological order), where to reach at node of index i, you connect its related previous nodes(i-1, i-2,…0) based on some condition if there is.

Ex : In LIS wherein you pickdp[j] iff arr[j] < arr[i]wherej -> 0,i. -

DP doesn’t always necessarily mean recursion + memoisation(to reuse the already computed results). In DP you always optimally pick a subproblem and build your way up. Related discussion.

-

For example, in Perfect Squares problem(consider n=12), using recursion, you end up picking up 3 as well, but that is not optimal since

9+1+1+1is not the correct answer. The answer is when you pick 2 (4+4+4) so you in recursion you end up doing extra

computations. This is avoided in DP since you have already picked up the most optimal path(subproblem). In this case, it’s starts with 2.

Reference videos :

- 5 Simple Steps for Solving Dynamic Programming Problems

- Dynamic Programming Explained (Practical Examples)

- Mastering Dynamic Programming - A Real-Life Problem

- Top 5 Dynamic Programming Patterns for Coding Interviews - For Beginners

Helpful resources :

Few examples :

- Longest Common Subsequence

- Minimum Edit Distance

- Min-Max dp kinda problem.

- Maximum Profit in Job Scheduling

- LCS&LAP, LIS, Russian Envelopes, Box Stacking(Max Height of Cuboids), Perfect squares, Coin Change, Largest Divisible Subset, Number of dice rolls with target sum, longest ideal subsequence.

- Path Problems : Minimum path sum, Num Unique paths, Shortest distance in bin matrix, sum paths in matrix divisible by k.

Recursion

Typically in recursion, the over all time complexity is the (number of calls or number of branches at a particular level, the branching factor) ^ (the depth of those branches, i.e the height, how deep you are branching it further).

For example in Binary Tree, if you have a branching factor of 2 then TC : 2^N where N=num of nodes in the tree. You can optimise this by maintaining a memo so if you find a pre-computed result, just return it which decreases the TC to O(N).

Understanding recursion :

Visualize recursion :

Bit Manipulation

- Notes on bit manipulation techniques.

- Examples

- Patterns.

- Bitmaps/Bitsets usecase

- Simple bitmaps implementation in golang.

Bloom Filters

- A nice visualization

- Bloom filters usage

- More on bloom filters.

Topological Sort

- Intro to topological sort

Hashing algorithms

- DHash algorithm.

Cache Eviction Algorithms

- algorithm for implementing LFU cache eviction scheme.

- Improvements on FIFO as compared to LRU based on the power of lazy promotion and quick demotion

- Sieve eviction algorithm and its implementation in python.

- Adaptive Cache Replacement algorithm.

- Cache algorithms implemented in python

Special/Extra Algorithms

- Newton’s method to find sqrt

- Lagrange’s four square theorem

- Brian Kernighan’s algorithm to count num of set bits

- Probabilistic Morris Counting algorithm

- Expression Evaluations

- Substring search algorithms available.

- Maze following algorithms.

- Problem on Fermat’s two square theorem.

- Algorithm behind Spell Checkers

Appendix

- 6.006 MIT - Intro to algorithms

- Challenging data structures and algorithms.

- Advanced DSA.

- Algorithms for Competitive programming

- Algorithms practice

- Algorithms gitbook

- Algorithms by Jeff Erickson

- Algorithms by Simon Tatham